Vaccines: From the cowshed to the clinic

Posted on April 14, 2016 by Microbiology Society

Vaccines are an essential component of public health, keeping people safe against disease. But how do they work, how are they manufactured and what are the challenges involved? We spoke to Dr Sarah Gilbert from the Jenner Institute to find out more.

Churchyards and corpses

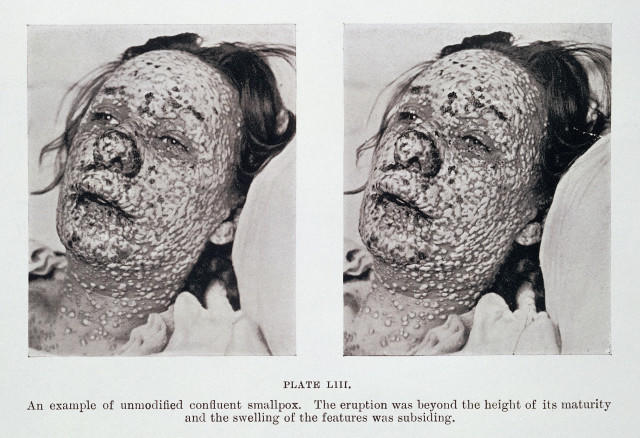

In the 1700s, smallpox was one of the world’s biggest killers, causing an estimated 400,000 deaths every year in Europe alone. About a third of the survivors went blind, and most were left with horrific, disfiguring scars. The disease held a special terror for people, as illustrated in this passage from Macaulay’s The History of England from the Accession of James II (1848):

“The smallpox was always present, filling the churchyards with corpses, tormenting with constant fears all whom it had not yet stricken, leaving on those whose lives it spared the hideous traces of its power, turning the babe into a changeling at which the mother shuddered, and making the eyes and cheeks of the betrothed maiden objects of horror to the lover.”

But smallpox lesions, although objects of horror, were also a source of salvation for many people. By taking the fluid from an infected pustule and inserting it under the skin of a patient, doctors could often protect people against the deadly pox. The hope was that a mild, non-lethal infection would result, giving the person immunity against the disease.

This procedure, known as variolation, dates back at least as far as the 15th century in China, where smallpox scabs would be blown up the nostrils of patients. The practice was brought to Europe at the start of the 18th century and it quickly gained widespread popularity among physicians.

While variolation was largely successful, a significant proportion of those receiving the treatment (1-2%) died as a result, or spread the disease and caused another epidemic. But compared to the death rate from natural smallpox (~10-20%), the risks were worth it. At the time, the threat of smallpox was always near, a fate to be dreaded and feared by everyone from peasants to the aristocracy.

Unless, that is, you happened to be a milkmaid.

Milking in the cowshed

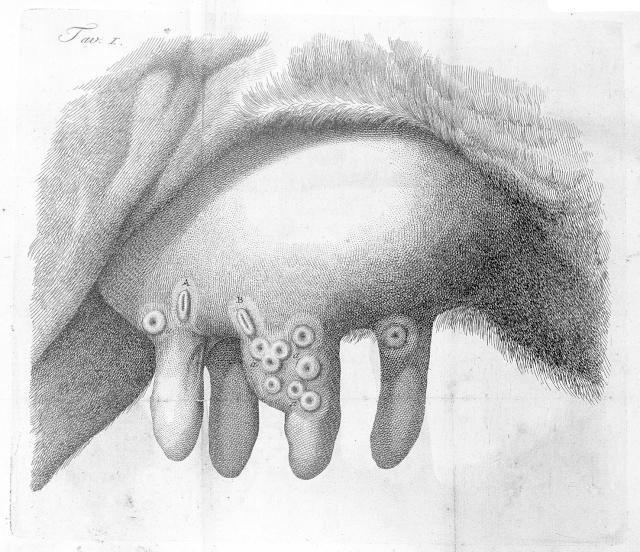

In the course of their work, milkmaids often became infected with a related but much less dangerous illness – cowpox. This disease could be transferred to humans through contact with a cow’s udders, causing lesions on the hands and arms. The cowpox and smallpox viruses were similar enough that infection with the first could provide immunity against the second.

Although the belief that milkmaids were somehow naturally safe from smallpox was fairly common, it wasn’t until the end of the 18th century that people began to test the idea of using cowpox to immunise humans against smallpox. The most famous of these (though by no means the first) was the scientist Edward Jenner.

Jenner found a milkmaid called Sarah Nelms who had caught cowpox from a cow named Blossom. Jenner used material from one of Nelms’ pocks to inoculate an eight-year-old boy called James Phipps – something that would never be allowed today – and afterwards showed that the boy was indeed immune to infection with smallpox.

Jenner named this technique ‘vaccination’ after the Latin for cowpox, Variolae vaccinae, and in the years that followed he pioneered the use of vaccines and relentlessly promoted them around the world.

Vaccines wanted: dead or alive

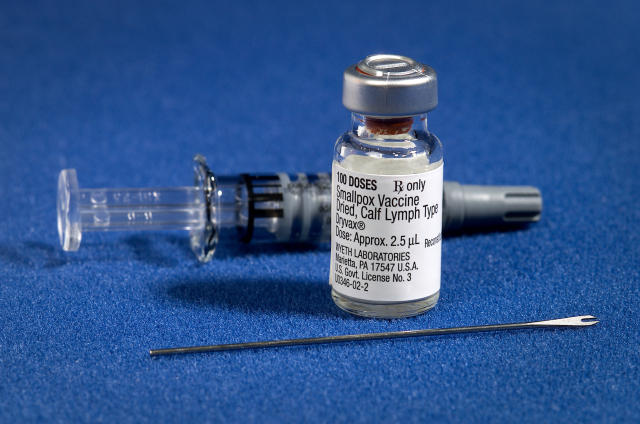

Fast forward a couple of centuries, and we now have successful vaccines for many diseases, including yellow fever, rubella and tuberculosis. Blossom’s hide now hangs in the library at St George’s University. And smallpox remains the only infectious disease that we have successfully eradicated in humans – all thanks to vaccination campaigns.

Today, the Jenner Institute in Oxford works to develop and test vaccines against global diseases like malaria, HIV, tuberculosis and flu. Modern vaccines are more sophisticated than pustule scrapings from a milkmaid, but they work in much the same way – by generating an immune response without causing disease.

“Some vaccines are killed pathogens or proteins that can’t cause infections,” says Sarah Gilbert, Professor of Vaccinology at the Jenner Institute. “While other vaccines are live and cause a weakened infection themselves. The body has to overcome the infection by generating an immune response, and this creates an immune memory that protects you from a real infection in the future.”

Each of these approaches has its own merits. Live vaccines generate a robust immune response because they cause a real, albeit diminished, infection. A weakened form of the pathogen is used so that it does not cause disease, but disease can still occur in people with weakened immune systems, or very rarely if the pathogen mutates into a more virulent strain. Killed vaccines, on the other hand, are more stable and can’t cause infection, but they trigger a weaker response in the immune system of the patient.

“Something of a halfway house is to use a live, but replication-deficient vaccine,” says Sarah. “The live virus will infect the first cell it finds, but it can’t replicate. So it can’t spread through the body or to other people.”

Because these vaccines are live, they provoke a strong immune response, but they are still very safe because they only remain live for a few hours. Sarah’s lab works on a way of producing these in the form of ‘viral vectored vaccines’. These can be made for any viral or bacterial pathogen by taking the gene for an antigen (a protein in the pathogen that stimulates a good immune response) and inserting it into a replication-deficient virus that is safe and well understood.

“This means we can produce the same type of vaccine but with many different antigens,” says Sarah. “So we can standardise the production process to an extent and the whole thing works more quickly. But despite that, it’s still slow going.”

The long road to the clinic

Vaccines take a long time to develop because they have to be safe. They can’t cause disease or have any long-lasting side effects – especially because they are often given to children. For this reason, there are a lot of technical, ethical and regulatory hurdles to overcome before you can roll out a vaccine across the population. In fact, not all vaccines are especially difficult to produce per se, but the extensive testing required to make sure there are no unintended consequences from them takes time.

First, the vaccine itself is developed in the lab by looking through the potential antigen candidates in the pathogen. Next, the vaccine has to be tested in animals for a suitable immune response – and if the pathogen is particularly dangerous, like the Ebola virus, then only a handful of high-containment facilities will have clearance to do this.

If the results are promising, the next stage is to test the vaccine in humans. But before it can go anywhere near people, a new version has to be made with qualified materials under extremely controlled conditions, and exhaustively tested for contamination with other pathogens or dangerous chemicals. Then there are further toxicology studies and safety tests in animals, and finally, application for ethical and regulatory approval of the trials.

“We have to produce a huge dossier of information about what’s in the vaccine and what we plan to do with it,” says Sarah. “Once we have approval, we can start vaccinating. But to get to that stage will take about a year as a best-case scenario – and that’s just for the first clinical trial.”

The first clinical trial, phase 1, is usually in a low-risk group (aged 18-50, with no pregnant women) of around 30 people. If the target group is different from this (for example, children or people in another country), there may be another round of phase 1 trials before phase 2 trials, which involve about 1,000 people.

If all goes well and the vaccine is safe, phase 3 trials can take place in many thousands of people to actually test how effective the vaccine is at preventing disease. Sometimes trial vaccines don’t work very well at all – so it’s back to the drawing board to do the whole thing again.

Forewarned and forearmed

The average vaccine takes over 10 years to develop and has just a 6% chance of making it to market. This generally means that by the time an epidemic hits, it’s already much too late to start development. Doing the pre-clinical work and some early trials ahead of time is the only way we can be ready for disease outbreaks when they strike.
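To get a feel for what a 6% success rate implies, here is a rough back-of-the-envelope sketch in Python. It rests on a simplifying assumption that is not from the article: that each vaccine candidate succeeds or fails independently of the others, with the same 6% chance. Real candidates are not independent (they often share a platform or target), so treat the numbers as illustrative only. The function names are made up for this example.

```python
import math

# Assumption for illustration: every candidate has the same, independent
# chance of reaching market (the article's 6% figure).
P_SUCCESS = 0.06


def chance_of_at_least_one_success(n_candidates, p_success=P_SUCCESS):
    """Probability that at least one of n independent candidates succeeds."""
    return 1 - (1 - p_success) ** n_candidates


def candidates_needed(target, p_success=P_SUCCESS):
    """Smallest number of independent candidates needed so that the chance
    of at least one success is at least `target` (0 < target < 1)."""
    return math.ceil(math.log(1 - target) / math.log(1 - p_success))


if __name__ == "__main__":
    for n in (1, 5, 10, 20):
        print(n, "candidates:", round(chance_of_at_least_one_success(n), 2))
    print("candidates needed for an even chance:", candidates_needed(0.5))
```

Under these assumptions, it would take around a dozen separate candidates in development just to have an even chance that one reaches market, which is part of why starting from scratch in the middle of an outbreak is so unlikely to pay off in time.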

“If we’ve already tested a vaccine in several hundred people and have a stockpile available, then when an outbreak happens we can test efficacy straightaway,” says Sarah. “In the Ebola outbreak, we started doing clinical trials in November 2014, but efficacy trials didn’t start until the following May. In the future, we would hope to have the early work already done.”

Of course, this isn’t always possible. The Zika virus has been known about since 1947, but as the symptoms of an infection are usually so mild, no one thought there was any need for a vaccine. It was only when the current outbreak began that a potential link to microcephaly emerged and scientists began scrambling to develop treatments. Even so, one thing the Ebola outbreak highlighted is the need for established ethical guidelines about how to conduct trials in the midst of an outbreak, so that promising vaccine candidates can be tested as soon as they appear.

Despite the fact that we have now eradicated smallpox and have vaccines against many animal and human pathogens at our disposal, the global toll of infectious diseases is still high, especially in low-income countries.

Vaccination is still one of the safest and most effective ways to protect people from infectious diseases, but having guidelines, protocols, successful trials and stockpiles in place is essential for vaccines to be successfully developed and deployed. Being forewarned against disease epidemics isn’t enough or always even possible – we need to be forearmed.